I’m excited to share a major leap forward in how we develop and deploy AI. In collaboration with NVIDIA, we’ve integrated NVIDIA NIM microservices and NVIDIA AgentIQ toolkit into Azure AI Foundry—unlocking unprecedented efficiency, performance, and cost optimization for your AI projects.

A new era of AI efficiency

In today’s fast-paced digital landscape, scaling AI applications demands more than just innovation—it requires streamlined processes that deliver rapid time-to-market without compromising on performance. With enterprise AI projects often taking 9 to 12 months to move from conception to production, every efficiency gain counts. Our integration is designed to change that by simplifying every step of the AI development lifecycle.

NVIDIA NIM on Azure AI Foundry

NVIDIA NIM™, part of the NVIDIA AI Enterprise software suite, is a set of easy-to-use microservices engineered for secure, reliable, and high-performance AI inferencing. Built on technologies such as NVIDIA Triton Inference Server™, TensorRT™, TensorRT-LLM, and PyTorch, NIM microservices are designed to scale on managed Azure compute.

They provide:

- Zero-configuration deployment: Get up and running quickly with out-of-the-box optimization.

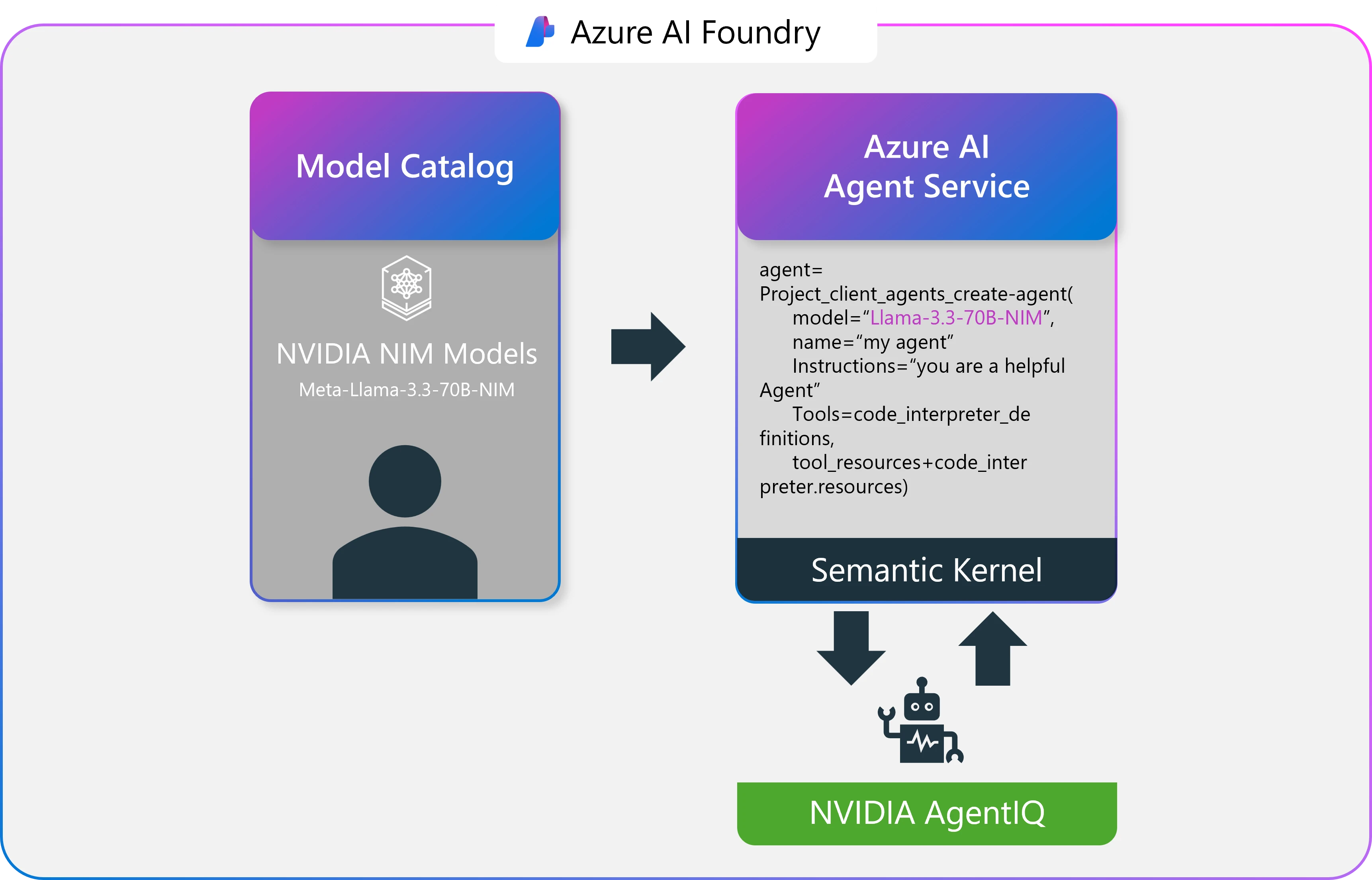

- Seamless Azure integration: Works effortlessly with Azure AI Agent Service and Semantic Kernel.

- Enterprise-grade reliability: Benefit from NVIDIA AI Enterprise support for continuous performance and security.

- Scalable inference: Tap into Azure’s NVIDIA accelerated infrastructure for demanding workloads.

- Optimized workflows: Accelerate applications ranging from large language models to advanced analytics.

Deploying these services takes just a few clicks: select a model such as Llama-3.3-70B-NIM from the model catalog in Azure AI Foundry, integrate it directly into your AI workflows, and start building generative AI applications within the Azure ecosystem.
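As a rough illustration of what integrating a deployed NIM into a workflow can look like, here is a minimal sketch that builds a chat request in the OpenAI-style format that NIM language-model microservices expose. The endpoint URL, the `/v1/chat/completions` path on your deployment, the bearer-token header, and the model name are all assumptions for illustration; check your deployment's details page in Azure AI Foundry for the actual values.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request.

    Assumption: the deployed endpoint accepts POST {base_url}/v1/chat/completions
    with a bearer token. Adjust the path and auth header to match your deployment.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first choice's text."""
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Hypothetical values: replace with your own endpoint, key, and model name.
    req = build_chat_request(
        base_url="https://<your-endpoint>.example.invalid",
        api_key="<your-api-key>",
        model="<your-deployed-model-name>",
        prompt="Summarize the benefits of microservice-based inference.",
    )
    print(req.full_url)  # send(req) would perform the actual network call
```

Separating request construction from sending keeps the sketch easy to inspect and test without network access; in practice you might prefer an OpenAI-compatible client library pointed at the same endpoint.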

Optimizing performance with NVIDIA AgentIQ

Once your NVIDIA NIM microservices are deployed, NVIDIA AgentIQ takes center stage. This open-source toolkit is designed to connect, profile, and optimize teams of AI agents, enabling your systems to run at peak performance. AgentIQ delivers:

- Profiling and optimization: Leverage real-time telemetry to fine-tune AI agent placement, reducing latency and compute overhead.

- Dynamic inference enhancements: Continuously collect and analyze metadata—such as predicted output tokens per call, estimated time to next inference, and expected token lengths—to dynamically improve agent performance.

- Integration with Semantic Kernel: Direct integration with Azure AI Foundry Agent Service further empowers your agents with enhanced semantic reasoning and task execution capabilities.

This intelligent profiling not only reduces compute costs but also boosts accuracy and responsiveness, so that every part of your agentic AI workflow is optimized for success.
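To make the idea of per-agent profiling concrete, the following is a minimal, generic sketch of collecting latency and output-token counts for each agent call and summarizing them. It is not the AgentIQ API; the `CallProfiler` class and its method names are hypothetical, and it only illustrates the kind of telemetry described above.

```python
import time
from collections import defaultdict
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class AgentStats:
    """Raw measurements collected for one agent."""
    latencies: list = field(default_factory=list)
    output_tokens: list = field(default_factory=list)


class CallProfiler:
    """Hypothetical helper that records per-agent call telemetry."""

    def __init__(self):
        self._stats = defaultdict(AgentStats)

    def record(self, agent: str, latency_s: float, output_tokens: int) -> None:
        stats = self._stats[agent]
        stats.latencies.append(latency_s)
        stats.output_tokens.append(output_tokens)

    def timed(self, agent: str, fn, *args, **kwargs):
        """Call fn, which must return (text, output_token_count), and record it."""
        start = time.perf_counter()
        text, tokens = fn(*args, **kwargs)
        self.record(agent, time.perf_counter() - start, tokens)
        return text

    def summary(self) -> dict:
        """Return call count, mean latency, and mean output tokens per agent."""
        return {
            agent: {
                "calls": len(s.latencies),
                "mean_latency_s": mean(s.latencies),
                "mean_output_tokens": mean(s.output_tokens),
            }
            for agent, s in self._stats.items()
        }


if __name__ == "__main__":
    profiler = CallProfiler()
    # Stand-in for a real model call; returns (text, output_token_count).
    profiler.timed("planner", lambda: ("plan drafted", 120))
    profiler.record("writer", latency_s=0.8, output_tokens=450)
    print(profiler.summary())
```

A summary like this can show which agents dominate latency or token usage, which is the sort of signal a profiling toolkit would use to guide optimization.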

In addition, we will soon be integrating the NVIDIA Llama Nemotron Reason open reasoning model. NVIDIA Llama Nemotron Reason is a powerful AI model family designed for advanced reasoning. According to NVIDIA, Nemotron excels at coding, complex math, and scientific reasoning while understanding user intent and seamlessly calling tools like search and translations to accomplish tasks.

Real-world impact

Industry leaders are already witnessing the benefits of these innovations.

Drew McCombs, Vice President, Cloud and Analytics at Epic, noted:

"The launch of NVIDIA NIM microservices in Azure AI Foundry offers a secure and efficient way for Epic to deploy open-source generative AI models that improve patient care, boost clinician and operational efficiency, and uncover new insights to drive medical innovation. In collaboration with UW Health and UC San Diego Health, we’re also researching methods to evaluate clinical summaries with these advanced models. Together, we’re using the latest AI technology in ways that truly improve the lives of clinicians and patients."

Epic’s experience underscores how our integrated solution can drive transformational change—not just in healthcare but across every industry where high-performance AI is a game changer. As noted by Jon Sigler, EVP, Platform and AI at ServiceNow:

"This combination of ServiceNow’s AI platform with NVIDIA NIM and Microsoft Azure AI Foundry and Azure AI Agent Service helps us bring to market industry-specific, out-of-the-box AI agents, delivering full-stack agentic AI solutions to help resolve problems faster, deliver great customer experiences, and accelerate improvements in organizations’ productivity and efficiency."

Unlock AI-powered innovation

By combining the robust deployment capabilities of NVIDIA NIM with the dynamic optimization of NVIDIA AgentIQ, Azure AI Foundry provides a turnkey solution for building, deploying, and scaling enterprise-grade agentic applications. This integration can accelerate AI deployments, enhance agentic workflows, and reduce infrastructure costs—enabling you to focus on what truly matters: driving innovation.

Ready to accelerate your AI journey?

Deploy NVIDIA NIM microservices and optimize your AI agents with NVIDIA AgentIQ toolkit on Azure AI Foundry. Explore more about the Azure AI Foundry model catalog.

Let’s build a smarter, faster, and more efficient future together.

The post Accelerating agentic workflows with Azure AI Foundry, NVIDIA NIM, and NVIDIA AgentIQ appeared first on Microsoft Azure Blog.