At Microsoft, we believe the future of AI is being built right now across the cloud, on the edge and on Windows. Windows is, and will remain, an open platform that empowers developers to do their best work, offering the ultimate flexibility.

Our north star is clear: to make Windows the best platform for developers, built for this new era of AI, where intelligence is integrated across software, silicon and hardware. From working with Windows 11 on the client to Windows 365 in the cloud, we’re building to support a broad range of scenarios, from AI development to core IT workflows, all with a security-first mindset.

Over the past year, we’ve spent time listening to developers, learning what they value most, and where we have opportunities to continue to make Windows an even better dev box, particularly in this age of AI development. That feedback has shaped how we think about the Windows developer platform and the updates we’re introducing today.

What’s new for Windows at Build:

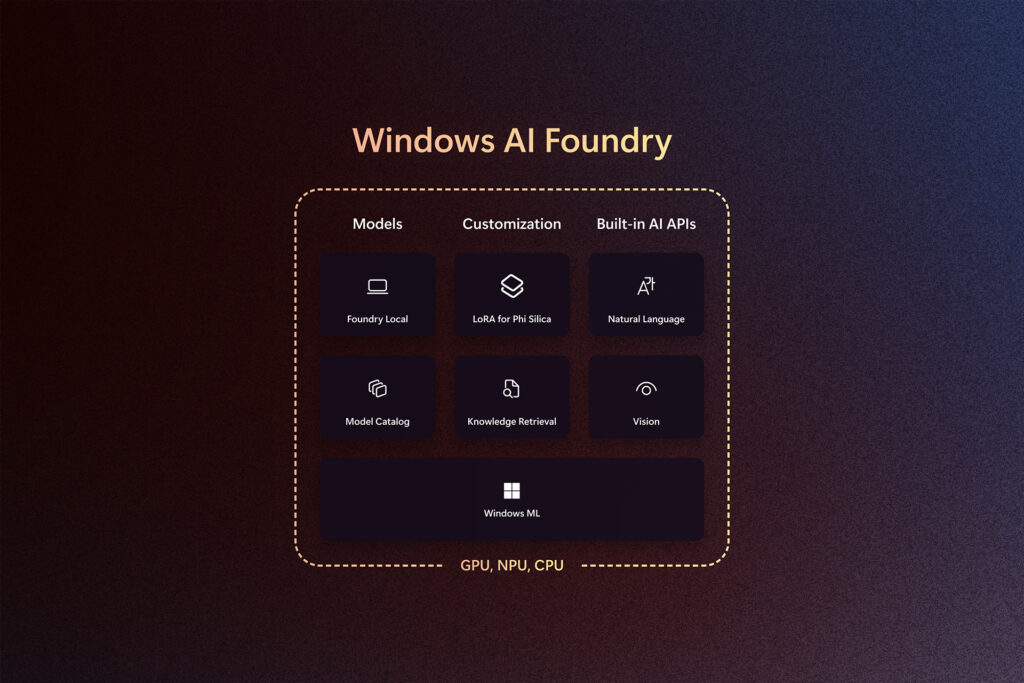

- Windows AI Foundry, an evolution of Windows Copilot Runtime, offers a unified and reliable platform supporting the AI developer lifecycle, from model selection and optimization to fine-tuning and deployment, across client and cloud. Windows AI Foundry includes several capabilities:

  - Windows ML is the foundation of our AI platform and the built-in AI inferencing runtime on Windows. It enables developers to bring their own models and deploy them efficiently across the silicon partner ecosystem, including AMD, Intel, NVIDIA and Qualcomm, spanning CPU, GPU and NPU.

  - Windows AI Foundry integrates Foundry Local and other model catalogs like Ollama and NVIDIA NIMs, offering developers quick access to ready-to-use open-source models on diverse Windows silicon. Developers can browse, test, interact with and deploy models in their local apps.

  - In addition, Windows AI Foundry offers ready-to-use AI APIs that are powered by Windows inbox models on Copilot+ PCs for key language and vision tasks, like text intelligence, image description, text recognition, custom prompt and object erase. We are announcing new capabilities like LoRA (low-rank adaptation) for fine-tuning our inbox SLM, Phi Silica, with custom data. We are also announcing new APIs for semantic search and knowledge retrieval so developers can build natural language search and RAG (retrieval-augmented generation) scenarios in their apps with their custom data.

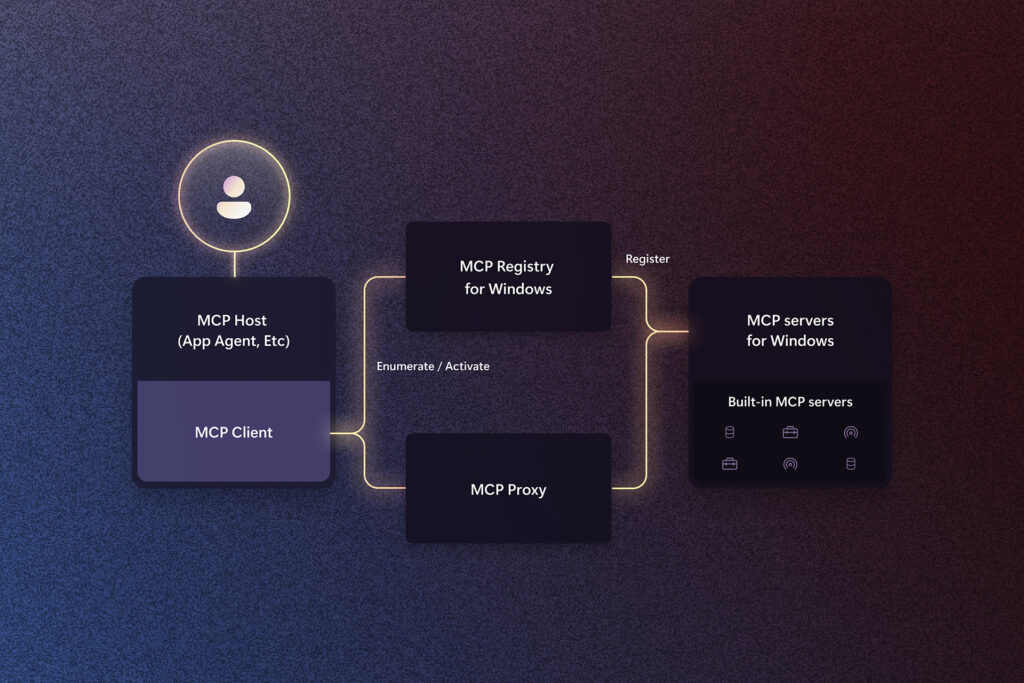

- Evolving Windows 11 for the agentic future with native support for Model Context Protocol (MCP). MCP integration with Windows will offer a standardized framework for AI agents to connect with native Windows apps, enabling apps to participate seamlessly in agentic interactions. Windows apps can expose specific functionality to augment the skills and capabilities of agents installed locally on a Windows PC. This will be available in a private developer preview with select partners in the coming months to begin garnering feedback.

- App Actions on Windows, a new capability for app developers to build actions for specific features in their apps and increase discoverability, unlocking new entry points for developers to reach new users.

- New Windows security capabilities like the Virtualization Based Security (VBS) Enclave SDK and post-quantum cryptography (PQC) give developers additional tools that make it easier to develop secure solutions as the threat landscape continues to evolve.

- Open sourcing Windows Subsystem for Linux (WSL), inviting developers to contribute, customize and help us integrate Linux more seamlessly into Windows.

- New improvements to popular Windows Developer tools including Terminal, WinGet and PowerToys enable developers to increase their productivity and focus on what they do best – code.

- New Microsoft Store growth features now include free developer registration, Web Installer for Win32 apps, Analytics reports, App Campaign program, and more to help app developers grow user acquisition, discovery and engagement on Windows.

Windows AI Foundry

We want to democratize the ability for developers to build, experiment and reach users with breakthrough AI experiences. Developers who are just getting started with AI told us they prefer off-the-shelf solutions for task-specific capabilities to accelerate AI integration in their apps. Developers also told us they need an easy way to browse, test and integrate open-source models in their apps. Advanced developers building their own models told us they prefer fast and capable solutions to deploy models efficiently across diverse silicon. To cater to these different development needs, we evolved Windows Copilot Runtime into Windows AI Foundry, which offers many powerful capabilities. Learn more.

Developers can more easily access ready-to-use open-source models

Windows AI Foundry integrates models from Foundry Local and other model catalogs like Ollama and NVIDIA NIMs, offering developers quick access to ready-to-use open-source models on diverse Windows silicon. With the Foundry Local model catalog, we have done the heavy lifting of optimizing these models across CPUs, GPUs and NPUs, making them ready to use instantly.

During preview, developers can access Foundry Local by installing it from WinGet (winget install Microsoft.FoundryLocal) and using the Foundry Local CLI to browse, download and test models. Foundry Local will automatically detect device hardware (CPU, GPU and NPU) and list compatible models for developers to try. Developers can also use the Foundry Local SDK to integrate Foundry Local into their apps. We will make these capabilities available directly in Windows 11 and Windows App SDK in the coming months, optimizing the developer experience of shipping production apps using Foundry Local.

While we offer ready-to-use open-source models, we have a growing number of developers building their own models and bringing breakthrough experiences to end-users. Windows ML is the foundation of our AI platform and is the built-in AI inferencing runtime, offering simplified and efficient model deployment across CPUs, GPUs and NPUs.

Windows ML is a high-performance local inference runtime built directly into Windows to simplify shipping production applications with open source or proprietary models including our own Copilot+ PC experiences. It was built from the ground up to optimize for model performance and agility and to respond to the speed of innovation in model architectures, operators and optimizations across all layers of the stack. Windows ML is an evolution of DirectML (DML) based on our learnings from the past year, listening to feedback from many developers, our silicon partners and our own teams developing AI experiences for Copilot+ PCs. Windows ML is designed with this feedback in mind, empowering our silicon partners – AMD, Intel, NVIDIA, Qualcomm – to leverage the execution provider contract to optimize for model performance, and match the pace of innovation.

Windows ML offers several benefits:

Simplified deployment: Windows ML enables developers to ship production applications without needing to package ML runtimes, hardware execution providers or drivers with their app. Windows ML detects the hardware on client devices, pulls down the appropriate execution providers and selects the right one for inference based on developer-provided configuration.

Automatic adaptation to future generations of AI hardware: Windows ML enables developers to build AI apps confidently even in a fast-evolving silicon ecosystem. As new hardware becomes available, Windows ML keeps all required dependencies up to date and adapts to new silicon while maintaining model accuracy and hardware compatibility.

Tools to prepare and ship performant models: AI Toolkit for VS Code includes robust tools for tasks ranging from model conversion and quantization to optimization, simplifying the process of preparing and shipping performant models, all in one place.

We are working closely with all our silicon partners – AMD, Intel, NVIDIA, Qualcomm – to integrate their execution providers seamlessly with Windows ML to provide the best model performance for their specific silicon.
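As a rough illustration of the selection step described under simplified deployment, the following Python sketch picks an execution provider from a preference list supplied by the developer. It is not the Windows ML API: the function name, the provider labels ("NPU", "GPU", "CPU") and the fallback policy are all assumptions made for this example.

```python
from typing import Optional, Sequence


def select_execution_provider(
    available: Sequence[str],
    preferred: Sequence[str],
    fallback: str = "CPU",
) -> Optional[str]:
    """Return the first preferred provider that the device actually offers.

    The labels are hypothetical placeholders, not Windows ML identifiers.
    If no preferred provider is present, fall back to `fallback` when it is
    available, otherwise return None.
    """
    available_set = set(available)
    for provider in preferred:
        if provider in available_set:
            return provider
    return fallback if fallback in available_set else None


if __name__ == "__main__":
    # A device without an NPU: the developer prefers NPU, then GPU.
    detected = ["CPU", "GPU"]
    print(select_execution_provider(detected, preferred=["NPU", "GPU"]))
```

Keeping the preference order in configuration rather than in code is the design point here: the same app can run on devices with different accelerators without rebuilding.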

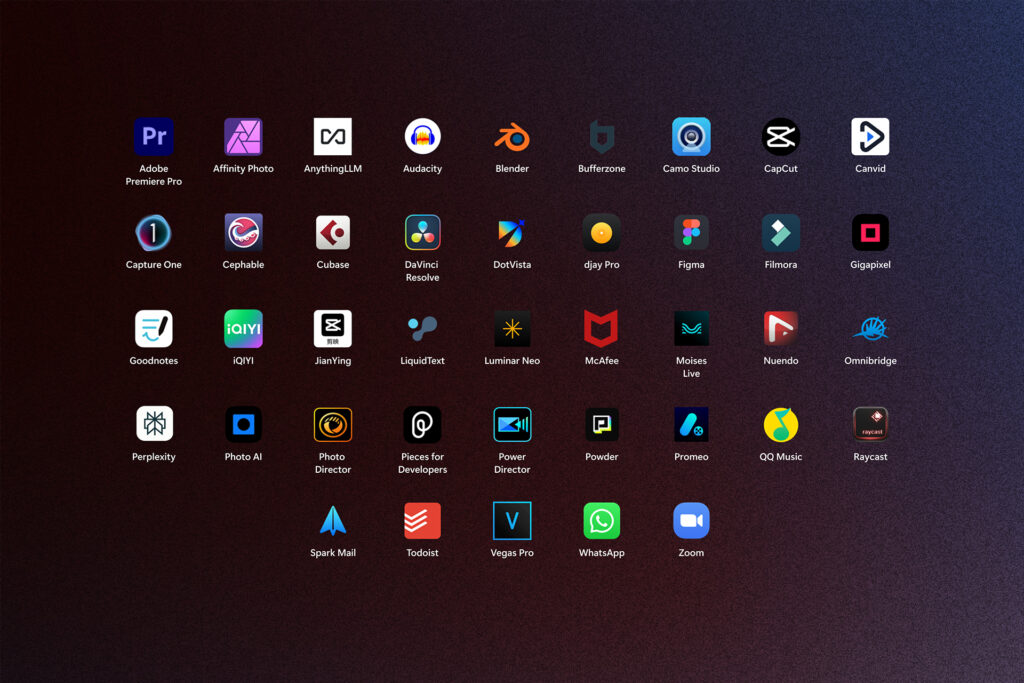

Many app developers like Adobe, Bufferzone, McAfee, Reincubate, Topaz Labs, Powder and Wondershare are already working with us to leverage Windows ML to deploy models across AMD, Intel, NVIDIA and Qualcomm silicon. To learn more about Windows ML, visit this blog.

Integrate AI using APIs powered by Windows built-in models quickly and easily

We are offering ready-to-use AI APIs powered by Windows inbox models for key tasks like text intelligence and image processing. These include language APIs like text summarization and rewrite, and vision APIs like image description, text recognition (OCR), image super resolution and image segmentation, all available in a stable version in the latest release of Windows App SDK 1.7.2. These APIs remove the overhead of model building and deployment. They run locally on the device, help provide privacy, security and compliance at zero additional cost, and are optimized for NPUs on Copilot+ PCs. App developers like Dot Vista, Filmora by Wondershare, Pieces for Developers, Powder, iQIYI and more are already leveraging our ready-to-use AI APIs in their apps.

We also heard from developers that they need to fine-tune LLMs with their custom data to get the required output for specific scenarios. Many also told us that fine-tuning the base model is a difficult task. That’s why we’re announcing LoRA (low-rank adaptation) support for Phi Silica.

Introducing LoRA (low-rank adaptation) for Phi Silica to fine-tune our built-in SLM with custom data

LoRA makes fine-tuning more efficient by training only a small set of low-rank adapter parameters with custom data while the base model’s weights stay unchanged. This allows improved performance on desired tasks without affecting the model’s overall abilities. This is available in public preview starting today on Snapdragon X Series NPUs, and will be available on Intel and AMD Copilot+ PCs in the coming months. Developers can access LoRA for Phi Silica in Windows App SDK 1.8 Experimental 2. Learn more.
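To make the efficiency argument concrete, here is a minimal Python sketch of the general LoRA idea. It is not the Phi Silica implementation. A frozen weight matrix W of shape d x k is adjusted by the product of two small trainable matrices, B (d x r) and A (r x k), so only r * (d + k) values are trained instead of d * k. The dimensions, the `scale` parameter and all function names are illustrative assumptions.

```python
def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Trainable values when a d x k weight matrix gets a rank-r adapter."""
    return r * (d + k)


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def apply_lora(w, b, a, scale=1.0):
    """Return the effective weight W + scale * (B @ A); W itself is not modified."""
    delta = matmul(b, a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


if __name__ == "__main__":
    # Illustrative sizes only; these are not Phi Silica's dimensions.
    d, k, r = 4096, 4096, 8
    print(d * k, "frozen base weights")
    print(lora_trainable_params(d, k, r), "trainable adapter weights")

    # Tiny worked example: a 2 x 2 identity matrix with a rank-1 adapter.
    w = [[1, 0], [0, 1]]
    b = [[1], [2]]
    a = [[3, 4]]
    print(apply_lora(w, b, a))
```

Because the adapter is stored separately from W, it can be applied or left out at inference time, which is what makes the comparison of responses with and without the adapter possible.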

Developers can get started with LoRA training for Phi Silica in AI Toolkit for VS Code. Choose the Fine-tuning tool, select the Phi Silica model, configure the project, and kick off the training in Azure with a custom dataset. Once the training is complete, developers can download the LoRA adapter, use it on top of the Phi Silica API and compare the responses with and without the adapter.

Introducing semantic search and knowledge retrieval for LLMs

We are introducing new semantic search APIs to help developers create powerful search experiences using their own app data. These APIs power both semantic search (search by meaning, including image search) and lexical search (search by exact words), giving users more intuitive and flexible ways to find what they need.

These search APIs run locally on all device types and offer seamless performance and privacy. On Copilot+ PCs, semantic capabilities are enabled for a premium experience.

Beyond traditional search, these APIs also support RAG (retrieval-augmented generation), enabling developers to ground LLM output in their own custom data.
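The difference between lexical search, semantic search and RAG can be sketched in a few lines of Python. This is a conceptual illustration only and does not use the Windows semantic search APIs. The documents, the two-dimensional vectors (hand-written stand-ins for the output of a real embedding model) and every function name are assumptions made for the example.

```python
import math

DOCS = {
    "doc1": "reset your password from the account settings page",
    "doc2": "export photos to an external drive",
}

# Hand-written toy vectors standing in for a real embedding model's output.
EMBEDDINGS = {
    "doc1": [0.9, 0.1],
    "doc2": [0.1, 0.9],
}


def lexical_search(query, docs):
    """Return ids of documents that contain every query word exactly."""
    terms = query.lower().split()
    return [
        doc_id
        for doc_id, text in docs.items()
        if all(term in set(text.lower().split()) for term in terms)
    ]


def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def semantic_search(query_vec, embeddings, top_k=1):
    """Rank documents by similarity of meaning rather than shared words."""
    ranked = sorted(
        embeddings, key=lambda doc_id: cosine(query_vec, embeddings[doc_id]), reverse=True
    )
    return ranked[:top_k]


def build_rag_prompt(question, context_ids, docs):
    """Ground a prompt in retrieved text before sending it to a language model."""
    context = "\n".join(docs[doc_id] for doc_id in context_ids)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    question = "forgot login credentials"
    print(lexical_search(question, DOCS))  # no document shares these exact words
    hits = semantic_search([0.8, 0.2], EMBEDDINGS)  # assumed embedding of the question
    print(hits)
    print(build_rag_prompt(question, hits, DOCS))
```

The query shares no words with the password document, so lexical search finds nothing, while the vector comparison still surfaces it. RAG then places that retrieved text in the prompt so the model answers from the app's own data.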

These APIs are available in private preview today. Sign up here to get early access.

In summary, Windows AI Foundry offers a host of capabilities for developers, meeting them where they are on their AI journey. It offers ready-to-use APIs powered by in-built models, tools to customize Windows in-built models, and a high-performance inference runtime to help developers bring their own models and deploy them across silicon. With Foundry Local integration in Windows AI Foundry, developers also get access to a rich catalog of open-source models.

We are pleased to celebrate our incredible developer community building experiences using on-device AI on Windows 11 today and we can’t wait to see what else developers will build using these rich capabilities offered in Windows AI Foundry.

Introducing native support for Model Context Protocol (MCP) to power the agentic ecosystem on Windows 11

As the world moves toward an agentic future, Windows is evolving to deliver the tools, capabilities and security paradigms for agents to operate in and augment their skills to deliver meaningful value to customers.

The MCP platform on Windows will offer a standardized framework for AI agents to connect with native Windows apps, which can expose specific functionality to augment the skills and capabilities of those agents on a Windows 11 PC. This infrastructure will be available in a private developer preview with select partners in the coming months so we can begin gathering feedback.

Security and privacy first: With new MCP capabilities, we recognize that we’ll be learning as we continue to expand MCP and other agentic capabilities, and our top priority is to ensure we’re building upon a secure foundation. Below are some of the principles guiding our responsible development of MCP on Windows 11:

- We’re committed to making the MCP Registry for Windows an ecosystem of trustworthy MCP servers that meet strong security baseline criteria.

- User control is a guiding principle as we develop this integration. Agents’ access to MCP servers is turned off by default. Once enabled, all sensitive actions performed by the agent on behalf of the user will be auditable and transparent.

- MCP server access will be governed by the principle of least privilege, enforced through declarative capabilities and isolation (where applicable), ensuring that the user is in control of what privileges are granted to an MCP server and helping limit the impact of any attacks on any specific server.
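The principles above (access off by default, auditable actions, least privilege through declared capabilities) can be pictured with a small Python sketch. This is a conceptual model only and not how Windows implements MCP security. The class names, the capability strings and the shape of the audit log are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ServerPolicy:
    """What the user has granted to one MCP server (hypothetical model)."""
    enabled: bool = False  # access stays off until the user opts in
    granted: frozenset = frozenset()  # capabilities the user has approved


@dataclass
class AccessBroker:
    """Checks each request against the policy and records the decision."""
    policies: dict = field(default_factory=dict)
    audit_log: list = field(default_factory=list)

    def authorize(self, server: str, capability: str) -> bool:
        # Unknown servers get the default policy, which denies everything.
        policy = self.policies.get(server, ServerPolicy())
        allowed = policy.enabled and capability in policy.granted
        # Every decision is recorded, including denials.
        self.audit_log.append((server, capability, allowed))
        return allowed


if __name__ == "__main__":
    broker = AccessBroker()
    print(broker.authorize("file-system", "read"))  # denied: not enabled yet

    broker.policies["file-system"] = ServerPolicy(
        enabled=True, granted=frozenset({"read"})
    )
    print(broker.authorize("file-system", "read"))   # allowed: explicitly granted
    print(broker.authorize("file-system", "write"))  # denied: never granted
    print(broker.audit_log)
```

Scoping grants per server means a compromised server can use only the capabilities the user approved for it, which is the containment effect the least-privilege principle aims for.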

Security is not a one-time feature—it’s a continuous commitment. As we expand MCP and other agentic capabilities, we will continue to evolve our defenses. To learn more about the security approach, visit Securing the Model Context Protocol: Building a safe agentic future on Windows.

We are introducing the below components in the MCP platform on Windows:

MCP Registry for Windows: This is the single, secure and trustworthy source to make MCP servers accessible to AI agents on Windows. Agents can discover the installed MCP servers on client devices via the MCP Registry for Windows, leverage their expertise and offer meaningful value to end-users.

MCP Servers for Windows: This will include Windows system functionalities like File System, Windowing, and Windows Subsystem for Linux as MCP Servers for agents to interact with.

Developers can wrap desired features and capabilities in their apps as MCP servers and make them available via MCP Registry for Windows. We are introducing App Actions on Windows, a new capability for developers, which will also be available as built-in MCP servers enabling apps to provide their functionality to agents.
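For readers new to MCP, the following Python sketch shows the general shape of the messages involved when an app feature is exposed as a tool. MCP is built on JSON-RPC 2.0: a server describes each tool with a name, a description and an input schema, and an agent invokes it with a `tools/call` request. The tool shown here (`resize_image`) and its arguments are hypothetical, and nothing in this sketch is specific to the MCP Registry for Windows.

```python
import json

# A hypothetical tool description, as a server might return it from tools/list.
RESIZE_TOOL = {
    "name": "resize_image",
    "description": "Resize an image file to the given width and height.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "path": {"type": "string"},
            "width": {"type": "integer"},
            "height": {"type": "integer"},
        },
        "required": ["path", "width", "height"],
    },
}


def make_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def missing_arguments(tool, arguments):
    """List required arguments that the caller did not supply."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


if __name__ == "__main__":
    args = {"path": "photo.png", "width": 800, "height": 600}
    request = make_tool_call(1, RESIZE_TOOL["name"], args)
    print(json.dumps(request, indent=2))
    print(missing_arguments(RESIZE_TOOL, {"path": "photo.png"}))
```

Because the tool is described by a schema, an agent can discover what arguments it accepts without any app-specific integration code.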

We are building this platform in collaboration with app developers like Anthropic, Perplexity, OpenAI and Figma who are integrating their MCP functionalities for their apps on Windows.

As Rich O’Connell, Head of Strategic Alliances at Anthropic, shared, “We’re excited to see continued adoption of the Model Context Protocol, with a thriving ecosystem of integrations built by popular services and the community. LLMs benefit substantially from connecting to your world of data and tools, and we look forward to seeing the value users experience by connecting Claude to Windows.”

And Aravind Srinivas, co-founder and CEO of Perplexity, shared, “At Perplexity, like Microsoft, we’re focused on trustworthy experiences that are truly useful. MCP in Windows brings assistive AI experiences to one of the most empowering operating systems in the world.”

And Kevin Weil, Chief Product Officer at OpenAI, shared, “We’re excited to see Windows embracing AI agent experiences through its adoption of the Model Context Protocol. This paves the way for ChatGPT to seamlessly connect to Windows tools and services that users rely on every day. We look forward to empowering both developers and users to create powerful, context-rich experiences through this integration.”

These early collaborations underscore our commitment to maintaining Windows as an open platform and evolving it for the agentic future. The momentum behind MCP offers an incredible opportunity for developers to increase app discoverability and engagement.

Introducing App Actions on Windows, a new capability for developers to increase discoverability for their apps

We have heard from developers that remaining top of mind for their users and boosting app engagement is critical to their growth. Being a company of developers ourselves, we understand this core need deeply. That’s why we are introducing App Actions on Windows. App Actions offer a new capability for developers to increase discoverability for their apps’ features, unlocking new entry points for developers to reach new users.

Already, leading apps across productivity, creativity and communication are building with App Actions to unlock new surfaces of engagement. Zoom, Filmora, Goodnotes, Todoist, Raycast, Pieces for Developers and Spark Mail are among the first wave of developers embracing this capability.

Developers can use:

- App Actions APIs to author actions for their desired features. Developers can also consume actions developed by other relevant apps to offer complementary functionality, thereby increasing the engagement time in their apps. Developers can access these APIs in Windows SDK 10.0.26100.4188 or greater.

- App Actions Testing Playground to test the functionality and user experience of their App Actions. Developers can download the testing tool via the Microsoft Store.

Powerful AI developer workstations to meet the needs of high computation and local inferencing workloads

Developers building compute-intensive AI workloads told us they need not only reliable software but also robust hardware for local AI development. We have partnered with a range of OEMs and silicon partners to offer powerful AI developer workstations.

OEM partners like Dell, HP and Lenovo offer a variety of Windows-based systems to provide flexibility in both hardware specifications and budget. The Dell Pro Max Tower provides impressive hardware specs for powerful performance, making it a great option for AI model inference on GPU or CPU and for local model fine-tuning. For processing power with space efficiency, the HP Z2 Mini G1a is a capable mini workstation. The new Dell Pro Max 16 Premium, HP ZBook Ultra G1a and Lenovo P14s/P16s are Copilot+ PCs that offer incredible mobility for developers. Learn more about these devices.

New Windows platform security capabilities

Introducing the VBS Enclave SDK (Preview) for secure compute needs

Security is at the forefront of our innovation and everything we do at Microsoft. In this era of AI, more applications need to protect their data from attacks by malware and even malicious users and administrators. In 2024, we introduced Virtualization Based Security (VBS) Enclaves technology to provide a Trusted Execution Environment where applications can perform secure computations, including cryptographic operations, that are protected from admin-level attacks. This is the same foundation that is securing our Recall experience on Copilot+ PCs. We are now making this secure foundation available to developers. The VBS Enclave SDK is now available in public preview and includes a set of libraries and tools that make programming enclaves a more natural experience. Developers can clone the repo here.

It starts with tooling to create an API projection layer. Developers can now define the interface between the host app and the enclave, while the tooling does all the hard work of validating parameters and handling memory management and safety checks. This allows developers to focus on their business logic while the enclave protects the parameters, data and memory. In addition, the libraries make it easy for developers to handle common tasks such as creating enclaves, encrypting and decrypting data, managing thread pools and reporting telemetry.

Post-quantum cryptography comes to Windows Insiders and Linux

We’ve previously discussed security challenges that come along with the advancement of quantum computing and have taken steps to contribute to quantum safety across the industry, including the addition of PQC algorithms to SymCrypt, our core cryptographic library.

We will be making PQC capabilities available for Windows Insiders soon and for Linux (SymCrypt-OpenSSL version 1.9.0). This integration is an important first step in enabling developers to experiment with PQC in their environments and assess compatibility, performance and integration with existing security systems. Early access to PQC capabilities helps security teams identify challenges, optimize strategies and ease transitions as industry standards evolve. By proactively addressing security concerns with current cryptographic standards, we are working to pave the way for a digital future that realizes the benefits of quantum and mitigates security risks. Learn more.

New experiences designed to empower every developer to be more productive on Windows 11

Windows Subsystem for Linux (WSL) offers a robust platform for AI development on Windows by making it easy to run Windows and Linux workloads simultaneously. Developers can easily share files, GUI apps, GPUs and more between environments with no additional setup.

Announcing Windows Subsystem for Linux is now open source

We are thrilled to announce we are open sourcing Windows Subsystem for Linux. With this, we are opening up the code that creates and powers the virtual machine backing WSL distributions and integrates it with Windows features and resources for community contributions. This will unlock new performance and extensibility gains. This is an open invitation to the developer community to help us integrate Linux more seamlessly into Windows and make Windows the go-to platform for modern, cross-platform development.

In fact, looking back, open sourcing WSL was the very first issue filed on the repository. At that time, all the logic for the project was inseparable from the Windows image itself, but since then we have made changes to WSL 2 distros and delivered WSL as its own standalone application. With that we’re able to close that first request! Thank you to the amazing WSL community for all your feedback, ideas, and efforts. Learn more about WSL open source.

New improvements to popular Windows Developer tools

We know building great AI experiences starts with developer productivity, from getting devices and environments set up faster to getting all the tools needed in one place. That’s why we are announcing improvements to popular Windows developer tools like WinGet, PowerToys and Terminal.

Get code-ready faster with WinGet Configuration

Developers can set up and replicate development environments using a single, reliable WinGet Configure command. Developers can now capture the current state of their device, including their applications, packages and tools (available in a configured WinGet source), into a WinGet Configuration file. WinGet Configuration has now been updated to support Microsoft DSC V3. If installed applications and packages are DSC V3 enabled, the application’s settings will also be included in the generated configuration file. It will be generally available next month. Visit the winget-dsc GitHub repository to learn more.

Introducing Advanced Windows Settings to help developers control and personalize their Windows experience

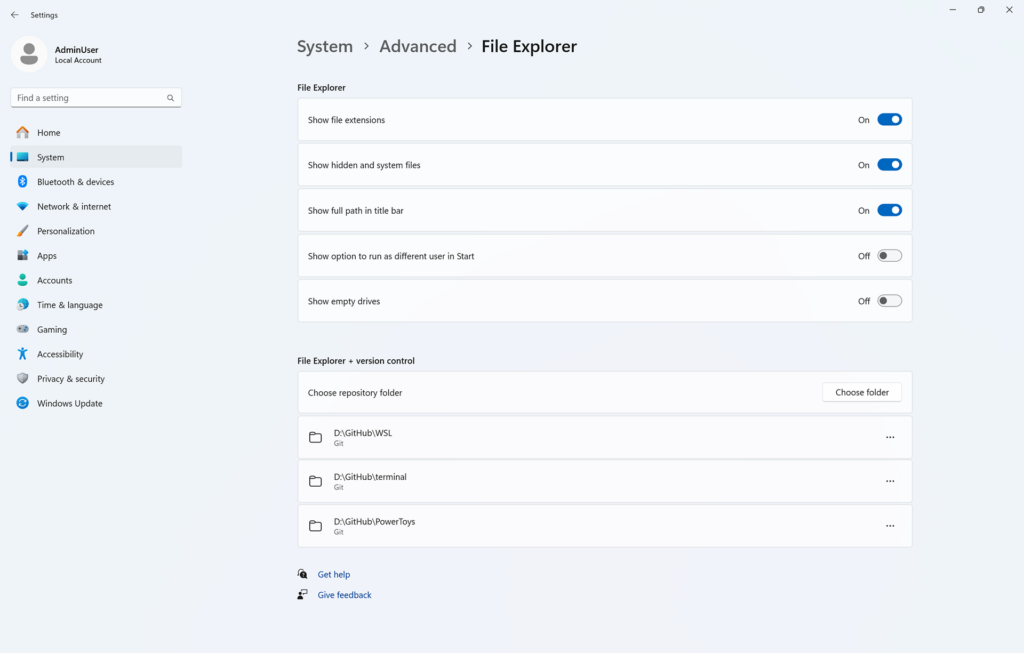

Developers and power users often face challenges in customizing Windows to meet their unique needs due to hidden or obscure settings. Advanced Windows Settings allow developers to easily control and personalize their Windows experience. They can access and configure powerful, advanced settings with just a few clicks, all from a central place within Windows Settings. These include powerful settings like enabling File Explorer with GitHub version control details. This will be coming soon in preview to the Windows Insider Program.

Introducing Command Palette in PowerToys

Command Palette, the next evolution of PowerToys Run, enables developers to reduce their context switching efforts by providing an easy way to access all their frequently used commands, applications and workflows from a single place. It is customizable, fully extensible and highly performant, empowering developers to manage interactions with their favorite tools effectively. It is now generally available.

Edit, the new command-line text editor on Windows

We are introducing a command-line text editor, Edit on Windows, which can be accessed by running “edit” in the command line. This enables developers to edit files directly in the command line, staying in their current flow and minimizing context switching. It is open source now and will be available to preview in the Windows Insider Program in the coming months. Visit the GitHub repo to learn more.

Microsoft Store: Strategic growth opportunity for app developers

The Microsoft Store is a trusted and scalable distribution channel for all Windows apps. With over 250 million monthly active users and a rapidly expanding catalog — including recent additions like ChatGPT, Perplexity, Fantastical, Day One, Docker and coming soon Notion — the Store is the largest app marketplace on Windows. And with a recently reimagined AI Hub, we’re making the Microsoft Store on Windows the go-to destination for people to discover what’s possible with AI on their devices. For those with Copilot+ PCs, we introduced a new AI Hub experience and AI Badges to spotlight experiences from Windows and the developer ecosystem.

Today, we are announcing exciting new capabilities for developers:

- Free account registration for individual developers — making it easier than ever for anyone to publish apps.

- Microsoft Store FastTrack, a new free preview program for qualified companies to submit their first Win32 app.

- Open Beta of App Campaigns, a new developer program to drive user acquisition across Store and other Microsoft surfaces.

- New discovery capabilities for apps on Windows, new acquisition and health analytic reports, actionable certification reports and a lot more.

Check out this blog to learn more.

Building for the future of AI on Windows

This past year has been incredibly exciting as we reimagined our platform capabilities to meet the needs of developers in this fast-evolving era of AI. This is just the start of our journey. We look forward to continuing to partner with our developer and MVP community to bring innovation to our platform and tools, and to enable them to build experiences that will empower every person on the planet to achieve more.

We can’t wait to see what you will build next!